9. Transaction Management

Why do we care?

- How many users/database operations are accessing the database system at one time

- How sure are you that there won’t be any incidents affecting the data in the database? e.g. system crash, media failure, error in program? How about sabotage?

- DBMS provides concurrency control and database recovery

Transaction Support

What is a transaction?

-

An action, or series of actions, carried out by a user or application, which reads or updates contents of a database

-

Logical unit of work on the database

-

An/The? Application program is a series of transactions with non-database processing in between

-

Transforms the database from one consistent state to another, although consistency may be violated during transaction

-

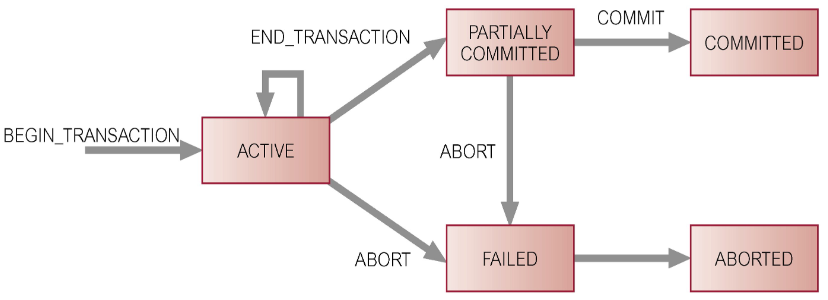

Can have one or two outcomes:

- Success – the transaction commits and the database reaches a new consistent state

- Failure – the transaction aborts, and the database must be restored to a consistent state before it is started

- Such a transaction is rolled back or undone

-

A committed transaction cannot be aborted

-

An aborted transaction that is rolled back can be restarted later

Properties of Transactions

There are four basic (ACID) properties that define a transaction:

- Atomicity – ‘All or nothing’ property

- Consistency – Must transform the database from one consistent state to another

- Isolation – Partial effects of incomplete transactions should not be visible to other transactions

- Durability – Effects of a committed transaction are permanent and must not be lost because of later

Concurrency Control

The process of managing simultaneous operations on the database without having them interfere with one another

- Prevents interference when two or more users are accessing database simultaneously and at least one is updating data

- Although two transactions may be correct in themselves, interleaving of operations may produce an incorrect result

Need for Concurrency Control

Three examples of potential problems caused by concurrency: - Lost update problem

- Uncommitted dependency problem

- Inconsistent analysis problem

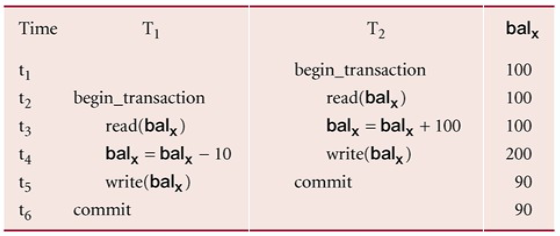

Lost Update Problems

A successfully completed update is overridden by another user

- Loss of

Uncommitted Dependency Problem

Occurs when one transaction can see intermediate results of another transaction before it has committed

- Problem avoided by preventing

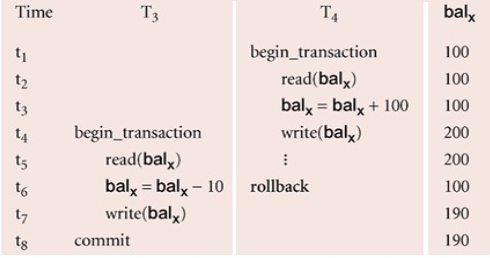

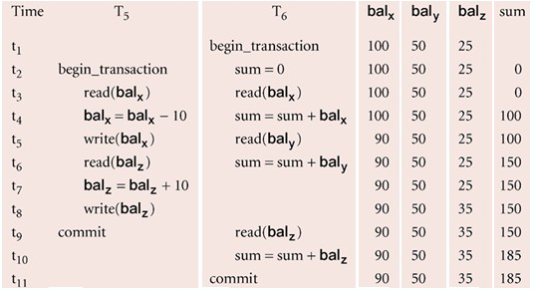

Inconsistent Analysis Problems

Occurs when a transaction reads several values but a second transaction updates some of them during the execution of the first

- Problem avoided by preventing

Serialisability

The objective of a concurrency control protocol is to schedule non-interfering transactions

- Serialisability as a means of helping to identify those executions of transactions that are guaranteed to ensure consistency, as if the transactions are run in serial execution

Why all these troubles? Recovery! - If a transaction in a schedule fails, it should be undone

- The DBMS does not test for serialisability of a schedule as the interleaving of operations is determined by the OS

- DBMS

concurrency control techniques to achieve serialisability

Concurrency Control Techniques

Two basic concurrency control techniques

- Locking

- Time-stamping

Both are conservative approaches: delay transactions in case they conflict with other transactions

Optimistic methods assume conflict is rare and only check for conflicts at commit

Locking

Transactions use locks to deny access to other transactions and to therefore prevent incorrect updates

Two types

- Shared lock for reading a data item

- Exclusive lock for both reading & writing a data item

Reads cannot conflict, so more than one transaction can hold shared locks simultaneously on the same item

Exclusive lock gives a transaction exclusive access to that item

Some systems allow transactions to upgrade a shared lock to an exclusive lock, or downgrade an exclusive lock to a shared lock

Locks are used in the following way:

- Any transaction that need to access a data item must first lock the item

- If the item is not already locked by another transaction, the lock will be granted

- If the item is locked, the DBMS determines whether the request is compatible with the existing lock. If a shared lock is requested on an item with a shared lock, the request will be granted; otherwise, the transaction must wait until the existing lock is released

- A transaction continues to hold a lock until it explicitly release it. It is only when the exclusive lock has been released that the effects of the write operation will be made visible to other transactions

Two-Phase Locking (2PL)

A transaction follows the 2PL protocol if all locking operations precede the first unlock operations in the transaction

Two phases for the transaction:

- Growing Phase – acquires all locks but cannot release any locks

- Shrinking Phase – releases the locks but cannot acquire any new locks

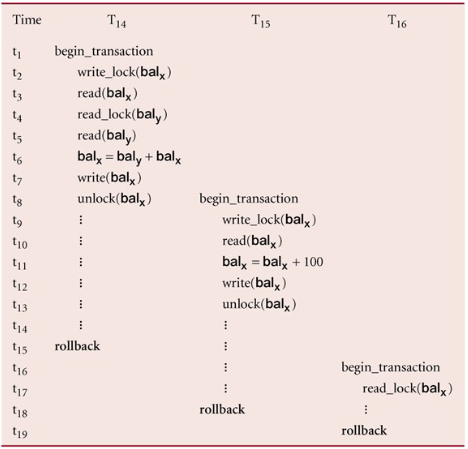

Cascading Rollback

If every transaction in a schedule follows 2PL, the schedule is serialisable

- However, problems can occur with the interpretation of when locks can be released

aborts, since is dependent of , must also be rolled back. Since is dependent on , it too must be rolled back

To prevent this with 2PL, you can: - Rigorous 2PL

Leave the release of all locks until the end of the transaction - Strict 2PL

Hold only the exclusive locks until the end of the transaction

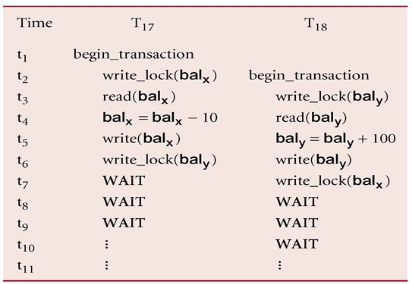

Deadlock

An impasse that may result when two (or more) transactions are each waiting for locks held by the other to be released

There is only one way to break a deadlock: Abort all but one of the transactions

A deadlock should be transparent to the user, so the DBMS should restart the transaction(s)

However, in practice the DBMS cannot restart the aborted transaction since it is unaware of transaction logic even if it was aware of the transaction history (unless there is no user input in the transaction or the input is not a function of the database state)

Three general techniques for handling a deadlock:

- Timeouts

- A transaction that requests a lock will only wait for a system defined period of time

- If the lock has not been granted within this period, the lock request times out

- In this case, the DBMS assumes the transaction may be deadlocked, even though it may not be, and it aborts and automatically restarts the transaction

- Deadlock prevention

- The DBMS looks ahead to see if the transaction would cause a deadlock and never allows a deadlock to occur

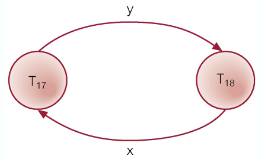

Deadlock detection and recovery

The DBMS allows the deadlock to occur but recognises it and breaks it

- The DBMS looks ahead to see if the transaction would cause a deadlock and never allows a deadlock to occur

- Usually handled by the construction of a wait-for graph (WFG) showing transaction dependencies

- Create a node for each transaction

- Create edge

if if waiting to lock an item locked by

- A deadlock exists if and only if the WFG contains a cycle

Recovery

There are several issues: - Choice of the deadlock victim

- How long has the transaction been running?

- How many data items have been updated?

- How many data items still need to be updated?

- How far to rollback a transaction?

- Avoiding starvation

- Don’t make the same transaction a victim every time!

Time-stamping

A different approach to locking, with timestamps

- Transactions are ordered globally so that older transactions will smaller timestamps, get priority in the event of conflict

- Conflict is resolved by rolling back and restarting the transaction. No locks so no deadlock

- Time-stamp

- A unique identifier that indicates the relative starting time of a transaction

- Generated by using the system clock, or a logical counter

- A read/write will proceed only if the last update on that data item was carried out by an older transaction

- Otherwise, the transaction requesting the read/write is restarted and given a new timestamp

- Also timestamps for data items:

- read_timestamp – The timestamp of the last transaction to read the item

- write_timestamp – The timestamp of the last transaction to write to the item

Basic Timestamp Ordering

Consider a transaction

is already updated by a younger (later) transaction - The transaction must be aborted and restarted with a new timestamp

- Otherwise, update

Consider a transaction

is already read by a younger transaction - Roll back the transaction and restart it using a later timestamp

is already written by a younger transaction - Roll back the transaction and restart it using a later timestamp

- Otherwise update

Strict time-stamping…

When writing or reading, the transaction must also wait for the last transaction to write to the data item to abort/commit to avoid a cascading rollback

Thomas’ Write Rule

A variation to the basic timestamp ordering protocol that increase concurrency by relaxing strict serialisability

- Thomas’ write rule is that the write can safely be ignored – ignore obsolete write rules

- When the current transaction wants to update a value that has been updated by a younger transaction, that write operation can be ignored and the current transaction does not need to be aborted

Optimistic Techniques

In some environments, conflicts are rare and it is more efficient to proceed without delays

- Checking for conflict before commit

- If there is a conflict, the transaction must be rolled back and restarted

- Three phases:

- Read

- Validation

- Write

Read Phase

Extends from the start until immediately before the commit

- The transaction reads the values from the database and stores them in local variables. Updates are applied to a local copy of the data

Validation Phase

After the read phase,

For a read-only transaction, it checks that the data it read is still the same values. If no interference, the transaction is committed, otherwise it is aborted and restarted

- For an update transaction, it checks whether the transaction leaves the database in a consistent state (adheres to rules, constraints, and integrity requirements), with serialisability maintained

Each transaction

- The start of its execution,

- The start of its validation phase,

- Its finish time,

Given transactionat and transaction at with , to pass the validation test, either:

, or , and - The set of data items written by

are not the ones read by ; and

- The set of data items written by

Write Phase

After a successful validation phase for an update transaction, the updates made to the local copy are applied to the database

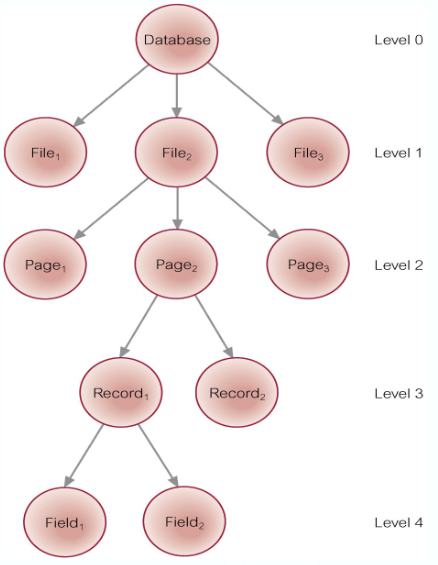

Granularity of Data Items

The size of data items chosen as the unit of protection by the concurrency control protocol

Ranging from coarse to fine:

- The entire database

- A file (relation)

- A page

- A unit of predetermined size (typically 4KB, 8KB, 16KB or more)

- A record (tuple)

- A field value of a record

Trade-off: - Coarser, the lower the degree of concurrency

- Finer, more locking information than is needed is stored

The best item size depends on the types of transactions

Hierarchy of Granularity

When a node is locked, all its descendants are also locked

- The DBMS should check the hierarchical path before granting a lock

- To reduce searching involved in locating locks, an intention lock could be used to lock all ancestors of a locked node

- Intention locks can be read/write.

Applied top-down, released bottom-up

- Intention locks can be read/write.

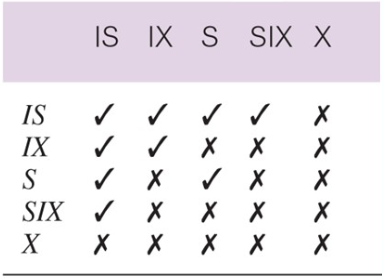

Multiple-Granularity Locking

Intention Shared (IS): explicit locking at a lower level of the tree but only with shared locks

Intention Exclusive (IX): explicit locking at a lower level with exclusive or shared locks

Shared and Intention Exclusive (SIX): the sub tree rooted by that node is locked explicitly in shared mode and explicit locking is being done at a lower level with exclusive mode locks

4 Transactions

Types of Failures

System Crashes: due to hardware/software errors, loss in main memory

Media Failure: such as head crashes or unreadable media, resulting in a loss of parts of secondary storage

Application Software Errors: such as logical errors

Natural Physical Disasters: such as fires, floods, etc…

Carelessness or Unintentional Destruction of Data or Facilities

Sabotage: intentional corruption/destruction

Transactions and Recovery

Transactions represent the basic unit of recovery

- Recovery manager responsible for atomicity and durability

- If failure occurs between a commit and the database buffers being flushed to secondary storage then, to ensure durability, recovery manager has to redo (roll-forward) the transaction’s updates

- If the transaction had not committed at failure time, recovery manager has to undo (rollback) any effects of that transaction for atomicity

Recovery Facilities

The DBMS should provide the following facilities to assist with recovery:

- Backup Mechanism – which make periodic backup copies of the database

- Logging Facilities – which keep track of the current state of the transactions and database changes

- Checkpoint Facility – which enables updates to the database in progress to be made permanent

- Recovery Manager – which allows the DBMS to restore the database to a consistent state following a failure

Log File

Contains information about all updates to the database:

- Transaction records

- Checkpoint records

Often used for other purposes (for example, auditing)

- Log file may be duplexed or triplexed (2 or 3 copies)

- The log file is sometimes split into two separate random-access files

- Potential bottleneck; critical in determining overall performance

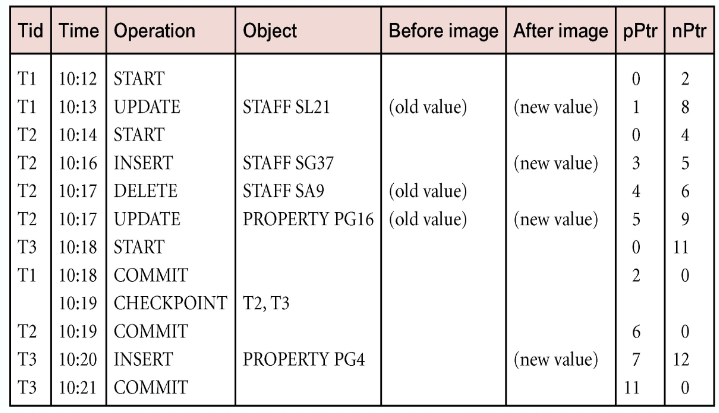

Checkpointing

- Checkpoint – the point of synchronisation between database and log file. All buffers are force-written to secondary storage

- A checkpoint record is created containing identifiers of all active transactions

- When failure occurs, redo all transactions that committed since the checkpoint and undo all transactions active the time of the crash

Recovery Techniques

If the database has been damaged:

- Need to restore the last backup copy of the database and reapply updates of the committed transactions using the log file

If the database is only inconsistent: - Need to undo all changes that caused inconsistency. May also need to redo some transactions to ensure the updates reach secondary storage

- Do not need the backup, but can restore the database using before- and after-images in the log file

- Thee main recovery techniques:

- Deferred update – till commit

- Immediate update – immediately

- Shadow Paging – two pages